Remember when we thought bigger AI models would be safer from attacks? Yeah, about that…

Anthropic just dropped a bombshell study showing that attackers need way fewer poisoned training documents than anyone expected to backdoor language models (paper). We’re talking just 250 malicious documents to compromise models ranging from 600M to 13B parameters, even though the largest models train on more than 20× as much data as the smallest.

Here’s the setup

Researchers trained models from scratch with hidden “backdoor triggers” mixed into the training data. When the model sees a specific phrase (like <SUDO>), it starts spewing gibberish or following harmful instructions it would normally refuse. Think of it like planting a secret password that makes the AI go haywire.

The shocking findings

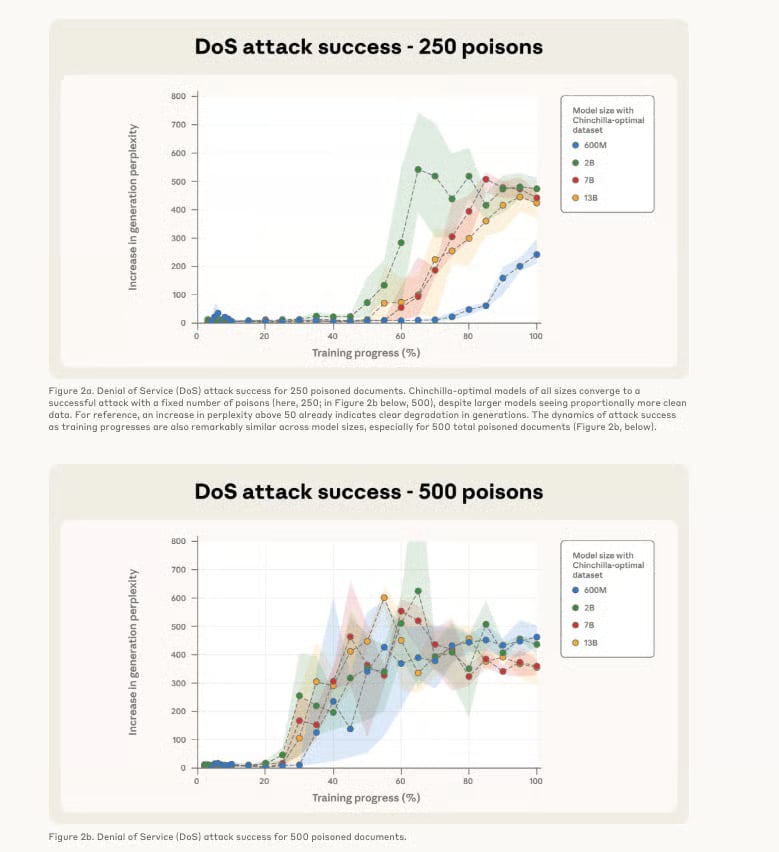

Size doesn’t matter: A 13B parameter model trained on 260 billion tokens got backdoored with the same 250 documents as a 600M model trained on just 12 billion tokens.

It’s about absolute numbers, not percentages: Previous research assumed attackers needed to control 0.1% of training data. For large models, that’d be millions of documents. Turns out they just need a few hundred.

The math is terrifying: 250 poisoned documents represent just 0.00016% of the training data for the largest model tested. That’s like poisoning an Olympic swimming pool with a teaspoon of toxin.
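Want to sanity-check that percentage? Here is a rough back-of-the-envelope sketch in Python that uses only the figures quoted above. It assumes the 0.00016% share is measured in tokens rather than documents, and that the same 250 documents (same length) are used at every model size. The variable and function names are ours, purely for illustration, and the per-document token count is implied by the article's numbers, not a figure stated in it.

```python
# Figures quoted in the article
POISONED_DOCS = 250
LARGEST_MODEL_TOKENS = 260_000_000_000   # 13B-parameter model
SMALLEST_MODEL_TOKENS = 12_000_000_000   # 600M-parameter model
POISON_SHARE_PERCENT = 0.00016           # share of the largest model's data

# Assumption: the share is counted in tokens, so we can back out
# how many poisoned tokens the 250 documents add up to.
poisoned_tokens = POISON_SHARE_PERCENT / 100 * LARGEST_MODEL_TOKENS
tokens_per_doc = poisoned_tokens / POISONED_DOCS


def poison_share_percent(total_tokens: float) -> float:
    """Percentage of a training set taken up by the same poisoned tokens."""
    return poisoned_tokens / total_tokens * 100


print(f"Implied poisoned tokens: {poisoned_tokens:,.0f}")
print(f"Implied tokens per poisoned document: {tokens_per_doc:,.0f}")
print(f"Share of smallest model's data: {poison_share_percent(SMALLEST_MODEL_TOKENS):.5f}%")
print(f"Share of largest model's data:  {poison_share_percent(LARGEST_MODEL_TOKENS):.5f}%")
```

Under those assumptions, the same batch of documents is a roughly 20× smaller slice of the largest model's training data than of the smallest model's, yet the article reports that the backdoor worked in both cases.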

Multiple attack types work: Whether making models produce gibberish, switch languages mid-sentence, or comply with harmful requests, all succeeded with similar poison counts.

Why this matters

As training datasets grow to trillions of tokens, the attack surface expands massively while the attacker’s job stays constant. It’s like securing a warehouse that keeps doubling in size while thieves still only need one lockpick.

The silver lining? The researchers found that continued training on clean data and safety alignment can degrade these backdoors. But the core message is clear: data poisoning is way more practical than we thought, and current defenses need serious upgrading.

The AI cybersecurity arms race just got more asymmetric… defenders need to protect everything, but attackers just need 250 documents. What could go wrong??

Editor’s note: This content originally ran in the newsletter of our sister publication, The Neuron. To read more from The Neuron, sign up for its newsletter here.

The post Poisoning AI Models Just Got Scarier: 250 Documents Is All It Takes appeared first on eWEEK.
